I took part in an excellent Seminar on Measuring the Effect of Cultural Policy, organised by Nordic Culture Point in November 2013. The presentations highlighted how measuring culture is a complex affair, and cannot be simplified into crude numbers. It’s notable that most of the presenters in the seminar were economists and/or statisticians.

Here are some glimpses of the presentations:

In his introductory talk, Mikael Schultz started with an example:

QUESTION: WHAT IS THE MEANING OF LIFE?

ANSWER: 42

It might very well be that the true answer to the meaning of life is in fact 42. The problem is that we don’t know how to interpret this answer. The same goes for all numeric values: used in isolation, they do not properly measure any quality. As Jaakko Hämeen-Anttila noted at the event, we have known since Aristotle that it’s impossible to measure quality by quantitative means.

Here are a couple of slides that further illustrate the same point:

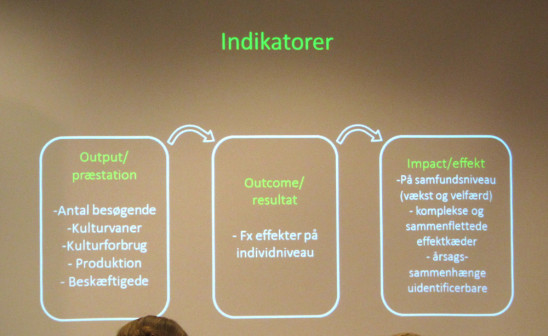

This slide is from the presentation by Clas-Uno Frykholm.

When thinking about evaluating culture, one needs to distinguish between three aspects:

– Output: Concrete results such as produced artworks, or events with a specific number of participants, etc.

– Outcome: The direct effect that the cultural project has on individual people

– Impact: The effect that the project has on society

In the end, it’s the impact that matters. And impact is a complex issue, as illustrated by this graph:

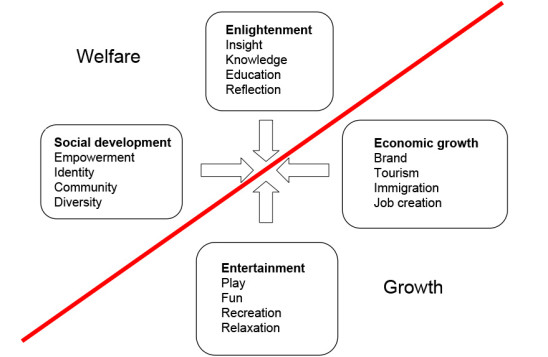

This graph was shown by Trine Bille, referring to research by Dorte Skot-Hansen. I found the same image in this slideset and translated the terms into English. At least these four sets of issues should be taken into account if one wants to understand the value of a specific cultural project.

Some discussion on Facebook: https://www.facebook.com/juha.m.huuskonen/posts/10152568013444388